Processor SQL on CSV

SQL stands for Structured Query Language, it lets you access and manipulates data in databases using Data Manipulation Language statements. For purposes of the SQL on CSV processor, Meiro Integrations treats CSV file as a table and allows you to run SQL queries on it.

This is the fastest way to run a relatively simple query, as the data that you are running on does not leave Meiro Integrations and the query runs inside the configuration container

Requirements

For working on SQL on CSV processor you need to have experience with SQL language and to be comfortable enough in writing SQL queries to transform, filter and join tables. You need to be familiar to include (but not limited to):

- SELECT statements

- JOIN clauses

- SQL functions

This component uses the SQLite library.

Learn more: Official SQLite documentation, SQL As Understood By SQLite, SQL Tutorial

Features

SQLite

The SQL on CSV processor runs on the SQLite library. Its main features are:

- Self-contained - it requires minimal support from the operating system or external library. This makes SQLite usable in any environment.

- Serverless - SQLite does not require a server to run, it is integrated with an application that accesses the database.

- Zero-configuration - it doesn’t have any configuration files or server processes to manage.

- Transactional - transactions in SQLite are fully ACID-compliant.

All these features of SQLite make SQL on the CSV processor a good choice for running relatively simple transformations on data that don’t need any complex analysis.

Multiple queries

The SQL on CSV processor can run multiple queries in the same configuration, the result of each query is written into a separate CSV file.

Data In/ Data Out

|

Data In |

All files for processing should be in CSV format and located in the folder in/tables. Files should have headers, if your file does not have a header, you can add it using the Command Line Interface Code processor. |

|

Data Out |

Output files in CSV format are written in the folder out/tables. |

Learn more: about folder structure in configuration please refer to this article.

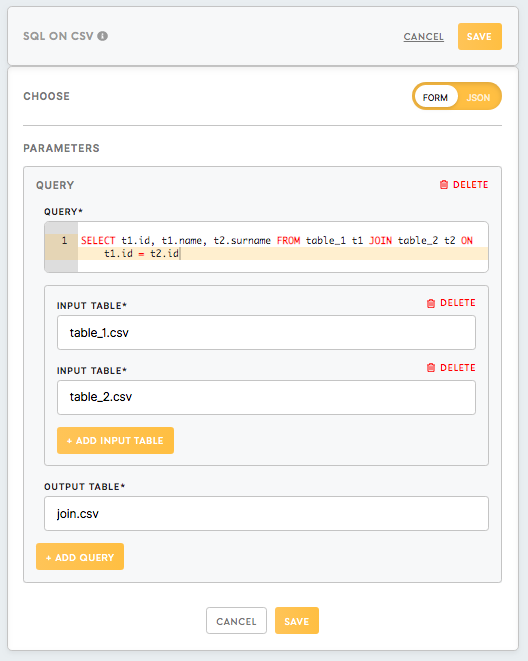

Parameters

| Query (required) |

Input a SQL query you want to run for table transformation. Learn more: How to search & replace within a code editor Remember: It is possible to add more than one query in the configuration, all fields described below are applicable to each query you write. |

| Input table (required) |

Define the full name of the file (with the extension) and its path in this field. Be aware, that the SQL on CSV processor implies that your input files are located in the folder For example, for Remember: You can use more than one file as input data, they will be treated in the query as separate tables. |

| Output table (required) | Enter the name of the output file that you wish to use to write the result of the query after running the configuration. The file will be written in the folder /data/out/tables/. |

Query requirements

|

Syntax |

SQL on CSV Processor runs on the SQLite, so the query should meet SQLite language syntax requirements.

Warning: Do not put a semicolon at the end of the query - this will save you a lot of debugging time, believe us. |

|

FROM clause |

When you use as an input only one table, you can skip the

When defining the table you select data from, use its name without specifying the extension and its path. For example, if your input file’s name is titanic.csv and it is located in |

|

Temporary tables |

Sometimes you need to create a temporary table to use it as input data for your resulting query. The most convenient way to do this is to use WITH clause and create a temporary table in your query which will be removed by SQLite after running the query.WITH the clause allows you to name the temporary table and to reference it in your query multiple times using its name. This way you can simplify the code, make it more readable and avoid multiple subqueries which makes the query less expensive in terms of performance.The example below shows how to create a temporary table from two input tables and use it in your query. |

|

SQL on CSV Sandbox |

To avoid testing the queries in the configuration, you can recreate SQL on CSV environment on your computer and write and test queries in your own sandbox.

Learn more: find out how to do it in this tutorial. |

Example

In Example 2 for the Python Code processor, it was shown how to split the Titanic dataset into 2 sets (male and female passengers). In this example, we will use these 2 tables to count how many passengers survived.

Use example 2 to set up the Python processor configuration based on this article. Then connect Python processor configuration with SQL on the CSV processor. As a result, files titanic_filter_male.csv and titanic_filter_female.csv will appear in the Data In bucket of Processor SQL on CSV configuration.

Query:

WITH survived as (

SELECT * FROM TITANIC_FILTER_MALE tm

WHERE tm.Survived = 1

UNION

SELECT * FROM TITANIC_FILTER_FEMALE tf

WHERE tf.Survived = 1

)

SELECT COUNT(*) FROM survivedInput Table:

titanic_filter_male.csvtitanic_filter_female.csv

Output Table:

survived.csv

After running the query you should see the result file survived.csv in the Data Out bucket with the header and one row:

COUNT(*)

342Learn more: Multiple chained queries in SQL on CSV processor

No Comments