Connector Kafka

Kafka, developed by the Apache Software Foundation, is an open-source distributed event streaming platform designed to handle large volumes of real-time data efficiently. Kafka's architecture comprises producers that send messages to topics and consumers that subscribe to these topics to process the messages. This connector facilitates the seamless export of data from Kafka to Meiro CDP, enabling organizations to integrate and analyze real-time data streams within their customer data ecosystem.

Learn more: about Apache Kafka here and here.

Requirements

In terms of authentication and communication encryption, the component supports the following modes of operation:

- PLAINTEXT: No authentication and no encryption

- SASL_SSL: Authentication with the Kafka broker using the SASL PLAIN mechanism over an SSL/TLS-encrypted connection

- SSL: Authentication and broker communication encryption using SSL/TLS certificates

Features

Only Avro message payloads can be loaded from Apache Kafka to Meiro CDP.
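Avro payloads published together with a Schema Registry are commonly framed in the Confluent wire format: one magic byte (0), a 4-byte big-endian schema ID, then the Avro-encoded body. Whether this connector expects exactly that framing is an assumption. The sketch below only illustrates how such a header could be split off, and `split_confluent_frame` is a hypothetical helper name.

```python
import struct
from typing import Tuple

MAGIC_BYTE = 0  # marker byte of the Confluent wire format (assumed framing)


def split_confluent_frame(message: bytes) -> Tuple[int, bytes]:
    """Return (schema_id, avro_body) from a Confluent-framed message value."""
    if len(message) < 5:
        raise ValueError("message too short to contain a schema header")
    # 1 signed byte for the magic marker, 4 unsigned bytes for the schema ID
    magic, schema_id = struct.unpack(">bI", message[:5])
    if magic != MAGIC_BYTE:
        raise ValueError(f"unexpected magic byte: {magic}")
    return schema_id, message[5:]
```

The returned schema ID would then be used to look up the writer schema before decoding the remaining bytes.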

Data In/Data Out

Learn more: for details about the folder structure, please see this article.

Parameters

Connection settings

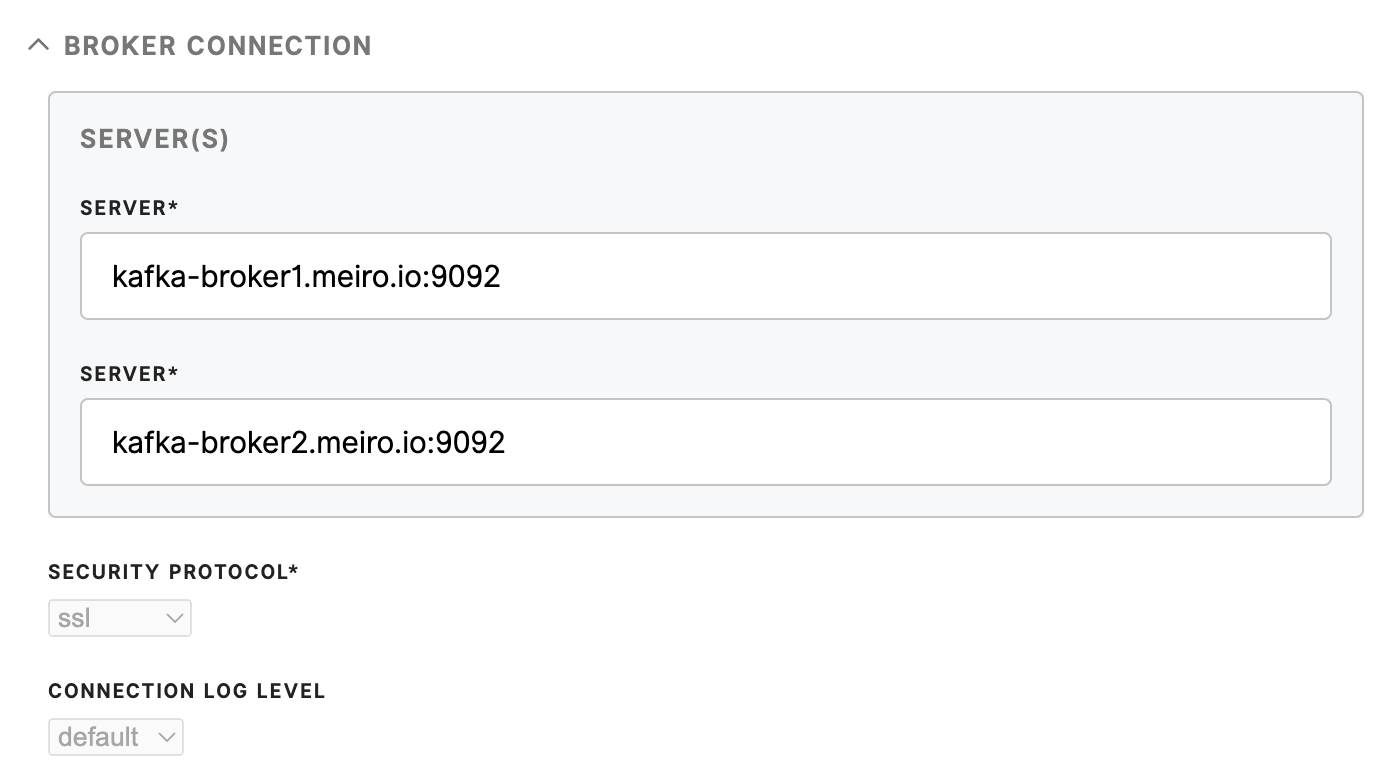

To connect to Kafka, you need to know its server(s) and security protocol.

| Parameter | Description |
| --- | --- |
| Server(s) (required) | List of Kafka brokers the connector should attempt initial connection with |
| Security protocol (required) | Authentication and encryption protocol the connector should use for communication with Kafka brokers |
| Connection log level | Log level for diagnostic logging on Kafka connection. Value is passed to configuration property debug for librdkafka. Possible options: |
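As a rough illustration of how these connection settings could translate into librdkafka properties, here is a minimal sketch. Only the `debug` mapping is stated in this article. Mapping Server(s) to `bootstrap.servers` and Security protocol to `security.protocol` is an assumption based on the standard librdkafka property names, and `base_connection_config` is a hypothetical helper.

```python
from typing import Dict, List, Optional

# Modes listed under Requirements
ALLOWED_PROTOCOLS = {"PLAINTEXT", "SASL_SSL", "SSL"}


def base_connection_config(
    servers: List[str],
    security_protocol: str,
    log_level: Optional[str] = None,
) -> Dict[str, str]:
    """Build librdkafka-style properties from the connection settings."""
    if not servers:
        raise ValueError("at least one Kafka broker is required")
    if security_protocol not in ALLOWED_PROTOCOLS:
        raise ValueError(f"unsupported security protocol: {security_protocol}")
    config = {
        "bootstrap.servers": ",".join(servers),  # assumed mapping
        "security.protocol": security_protocol,  # assumed mapping
    }
    if log_level:
        # The article states this value is passed to librdkafka's `debug`.
        config["debug"] = log_level
    return config
```

For example, `base_connection_config(["broker-1:9092", "broker-2:9092"], "SSL")` would yield a dictionary with a comma-separated broker list and no `debug` entry; the broker addresses here are placeholders.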

Security settings

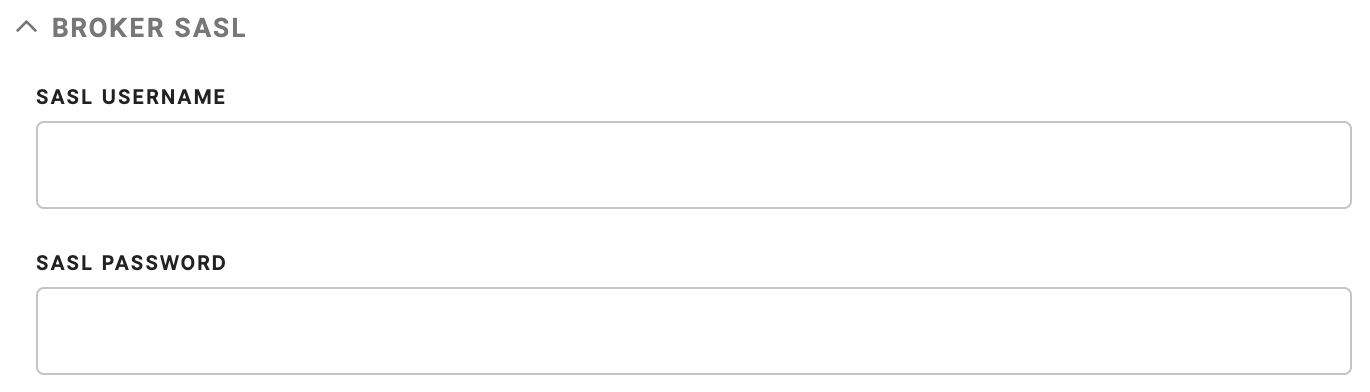

| Parameter | Description |
| --- | --- |
| SASL username | SASL username / API key for the SASL PLAIN authentication mechanism for the Kafka broker |
| SASL password | SASL password / API secret for the SASL PLAIN authentication mechanism for the Kafka broker |
| Broker’s CA certificate | CA certificate string (PEM format) for verifying the broker's key. Passing a directory value (for multiple CA certificates) is not supported. The value is passed to ssl.ca.pem in librdkafka |
| Client’s private key | Client's private key string (PEM format) used for authentication. The value is passed to ssl.key.pem in librdkafka |
| Client’s public key | Client's public key string (PEM format) used for authentication. The value is passed to ssl.certificate.pem in librdkafka |
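The security settings can be sketched as a small translation step into librdkafka properties. The `ssl.ca.pem`, `ssl.key.pem` and `ssl.certificate.pem` names come from the descriptions above; the `sasl.*` names are standard librdkafka properties. Which fields the connector actually applies for each protocol is an assumption, and `security_config` is a hypothetical helper.

```python
from typing import Dict, Optional


def security_config(
    security_protocol: str,
    sasl_username: Optional[str] = None,
    sasl_password: Optional[str] = None,
    ca_pem: Optional[str] = None,
    client_key_pem: Optional[str] = None,
    client_cert_pem: Optional[str] = None,
) -> Dict[str, str]:
    """Translate the security settings into librdkafka properties."""
    config: Dict[str, str] = {"security.protocol": security_protocol}
    if security_protocol == "SASL_SSL":
        if not (sasl_username and sasl_password):
            raise ValueError("SASL_SSL needs both a SASL username and password")
        config["sasl.mechanism"] = "PLAIN"
        config["sasl.username"] = sasl_username
        config["sasl.password"] = sasl_password
    if security_protocol in ("SASL_SSL", "SSL") and ca_pem:
        # PEM string, not a file path or directory
        config["ssl.ca.pem"] = ca_pem
    if security_protocol == "SSL":
        # Client key and certificate are assumed to apply to SSL mode only
        if client_key_pem:
            config["ssl.key.pem"] = client_key_pem
        if client_cert_pem:
            config["ssl.certificate.pem"] = client_cert_pem
    return config
```

With PLAINTEXT, the result contains only `security.protocol`, since that mode uses neither authentication nor encryption.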

Schema Registry

| Parameter | Description |
| --- | --- |
| Schema Registry URL | Avro Schema Registry URL, e.g. http(s)://kafka:8081 |
| Schema Registry SASL username | SASL username / API key for the SASL PLAIN authentication mechanism for Schema Registry |
| Schema Registry SASL password | SASL password / API secret for the SASL PLAIN authentication mechanism for Schema Registry |
| Schema Registry CA certificate | CA certificate string (PEM format) for verifying the Schema Registry's key. Required when HTTPS is enabled. |
| Schema Registry client’s private key | Client's private key string (PEM format) used for authentication. |
| Schema Registry client’s public key | Client's public key string (PEM format) used for authentication. |
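The rule that a CA certificate is required when HTTPS is enabled can be checked before a configuration is saved. The following is a minimal sketch of such a check, not the connector's own validation; `check_schema_registry` is a hypothetical helper name.

```python
from typing import Optional
from urllib.parse import urlparse


def check_schema_registry(url: str, ca_pem: Optional[str] = None) -> str:
    """Validate the Schema Registry URL and return its scheme."""
    parsed = urlparse(url)
    if parsed.scheme not in ("http", "https") or not parsed.netloc:
        raise ValueError(f"not an http(s) URL: {url!r}")
    if parsed.scheme == "https" and not ca_pem:
        # The CA certificate is required when HTTPS is enabled.
        raise ValueError("Schema Registry CA certificate is required for HTTPS")
    return parsed.scheme
```

Calling it with `http://kafka:8081` and no certificate passes, while `https://kafka:8081` without a CA certificate raises a `ValueError`.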

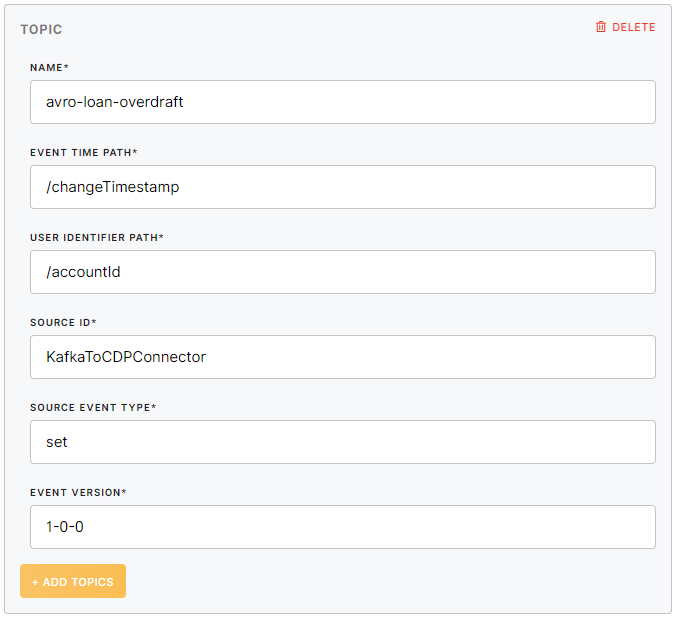

Topics (list)

Define which topics you want to load into CDP.

| Parameter | Description |
| --- | --- |
| Topic name (required) | Name of the Kafka topic from which messages will be consumed. A topic can be specified separately for each output event stream to CDP. If topics use different schemas, the schemas will be dynamically discovered and used accordingly. |
| Event Time Path (required) | JSON Path expression to search for in consumed Kafka messages to use as a CDP event timestamp (e.g. /changeTimestamp) |
| User Identifier Path (required) | JSON Path expression to search for in consumed Kafka messages to use as a CDP event user identifier (e.g. /accountId) |
| Source ID (required) | CDP Event Source ID corresponding to a Source defined in Meiro CDP. |
| Source Event type (required) | For example: set, created, updated, moved, deleted, etc. |
| Event Version (required) | CDP Event version corresponding to an Event Version defined in Meiro CDP. |
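To make the path settings more concrete, the sketch below resolves slash-separated paths such as /changeTimestamp and /accountId against a decoded message and combines the result with one topic's settings. The exact path semantics the connector supports and the shape of the resulting event are assumptions; `resolve_path`, `to_cdp_event` and all dictionary keys are hypothetical names used for illustration only.

```python
from typing import Any, Dict


def resolve_path(message: Dict[str, Any], path: str) -> Any:
    """Follow a slash-separated path such as /accountId through nested dicts."""
    current: Any = message
    for key in path.strip("/").split("/"):
        if not isinstance(current, dict) or key not in current:
            raise KeyError(f"path {path!r} not found in message")
        current = current[key]
    return current


def to_cdp_event(message: Dict[str, Any], topic: Dict[str, str]) -> Dict[str, Any]:
    """Combine a decoded message with one topic's settings (hypothetical shape)."""
    return {
        "source_id": topic["source_id"],
        "type": topic["event_type"],
        "version": topic["event_version"],
        "event_time": resolve_path(message, topic["event_time_path"]),
        "user_identifier": resolve_path(message, topic["user_identifier_path"]),
        "payload": message,
    }
```

A message lacking the configured path raises a `KeyError` here, which makes a misconfigured Event Time Path or User Identifier Path visible early rather than producing events without a timestamp or identifier.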

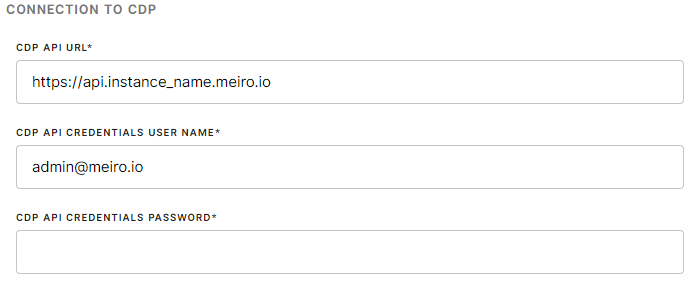

Connection to CDP settings

| Parameter | Description |
| --- | --- |
| CDP API URL (required) | Typically it is an endpoint feed. For example, the absolute URL is https://instance_name.meiro.io/api |
| CDP API Credentials User Name (required) | Account name |
| CDP API Credentials User Name Password (required) | Account password |
