Processor Python from Git repository

The Python from Git repository processor allows you to run a Python code located in a Git repository. Meiro clones the Git repository into the /data/repository folder and runs the code in the configuration.

The code repository is a file archive and a web hosting platform where a source of code for software, web pages, and other projects, is kept publicly or privately. Git is a version control system for tracking changes in a project, or in a set of files to collaborate effectively with a developer team or to manage a project. It stores all the information about the project in a special data structure called a Git repository. This is a Git directory located in your project directory.

Python is an interpreted high-level programming language for general-purpose programming. Because of its convenience, it is becoming a popular tool for solving data analytics problems such as data cleaning and data analysis.

Requirements

When setting up a configuration for a Python from Git repository component, you will need an account in any version-control platform that supports Git (most common are GitHub, BitBucket, GitLab) with a created repository containing Python script in it.

To work with Python from Git Repository component comfortably, you need to be familiar with:

- Programming in general

- Python syntax (enough for writing simple scripts)

- Git, a version control system

- any version-control platform supporting git (for example, GitHub).

Topics in Python you need to be familiar with include (but are not limited to):

- Data structures

- Control Flow tools

- Working with files (opening, reading, writing, unpacking, etc.)

- Modules and packages

We recommend that you begin with the official tutorial if you are not familiar with Python syntax but already have experience programming in other languages. If you are a complete novice in programming, we suggest that you check the tutorials for beginners.

Useful links: Python tutorial, Official Python documentation, Python Package Index

Useful links for a version-control platform: Git handbook - what a version control system, How to create a repository in GitHub, GitHub flow, Git official documentation

Features

OS Debian Jessie

Version Python 3.6

Packages pre-installed

apache-libcloud, awscli

beautifulsoup4, boto

cx_Oracle

flake8

GitPython, google-api-python-client, google-auth-httplib2, google-cloud-bigquery, google-cloud-storage

html5lib, httplib2

ijson

ipython

Jinja2, jsonschema

lxml

matplotlib, maya

numpy

oauthlib

pandas, pandas2, pymongo, pytest, python-dateutil, PyYAML

requests, requests-oauthlib

scikit-learn, scikits.statsmodels, scipy, seaborn, simplejson, SQLAlchemy, statsmodels

tqdm

urllib3

Data In/Data Out

Data In

Files for processing and transformation can be located in in/tables/ (CSV files) or in/files/ (all other types of files) folder depending on the previous component in the dataflow and the type of the file.

Data Out

Output files should be written in out/tables (CSV files) or out/files (all other types of files) folder depending on the need for the next component and the type of the file.

Learn more: about folder structure in configuration here.

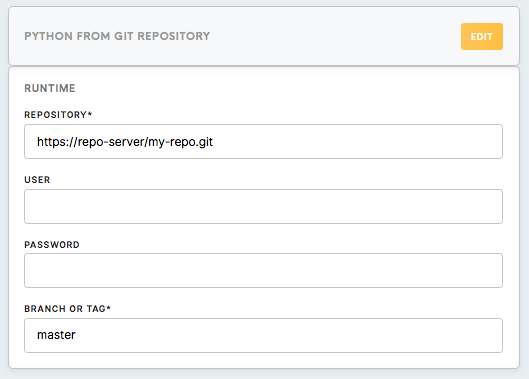

Runtime

Repository (Required)

This is the link to the Git repository that you are planning to work with.

To find it on GitHub, click on the Clone or Download button in the repository and copy the displayed link.

User (Optional)

Your account name on a version control platform (required only for private repositories).

Password (Optional)

The password to your account on a version control platform (required only for private repositories).

Branch or Tag (Required)

The name of the branch or tag of the Git repository you are using.

Read more: about the concept of branches in Git please refer to this article.

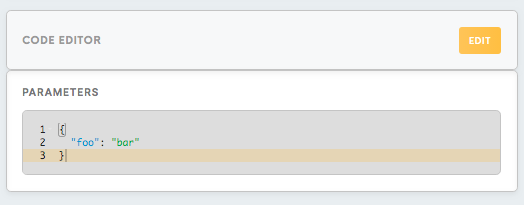

Code Editor

Parameters

The Parameters field is the property in config.json file, which allows you to keep the necessary values and to make them accessible from the script. It is supposed to be in a JSON format and represents a collection of property-value pairs. Parameters are accessible via property “parameters”.

Parameters can be useful in different cases, such as:

- Keeping sensitive information, such as a username and password or API key for authentication on a third-party platform outside your code repository.

- Keeping parameters of the test environment, so after moving to a stage or production phase, you can change parameters fast without changing the script.

Use parameters to keep whatever values you want to make it easily accessible and changeable throughout the Meiro platform. This is demonstrated in the example section below.

How to search & replace within a code editor

Requirements to the script

Structure of the project

The file in the repository containing the code you want to run should have the name main.py. If there is no such file in the repository, Meiro will return an error in the activity log.

If your code is too long, consider splitting it into modules - logical blocks and functions. We advise you to think through the structure of the project as all your modules should be accessible through the script file main.py.

Installing packages

Python from Git repository processor comes pre-installed with the most common packages, listed in the Features section above. However, if you need additional packages, it is possible to install them.

Meiro uses pip utility to install packages. To do this you need to create a requirement file named requirements.txt, generally, all the packages available on pypi.org can be installed. You need to list the names of the packages and their versions (if needed) in the file requirements.txt in your repository. If versions are not given, the latest versions will be installed.

Paths

Use absolute paths when you need to access data files in your script.

Input files

- an absolute path

/data/in/tables/..and/data/in/files/..

Output files

- an absolute path

/data/out/tables/..and/data/out/files/..

Standard output

The analog console log in Meiro is the activity log, where you can view the result of running your script including errors and exceptions.

Other requirements

When working with dataflow, you will need to work with files and tables a lot. Generally, you will need to open the input file, transform the data and write it to the output file. There are no special requirements to the script except the ones mentioned above in this section and the Python syntax itself.

You can check a few examples of scripts solving common tasks in the section below.

Examples

Example 1

This example illustrates a simple code that imports an open dataset from an external source, writes it to an output file, and prints a standard output to the console log. Usually, you will need to open the file from the input bucket, which was downloaded using a connector component, but in some cases, requesting the data from external resources may be necessary.

Additionally, this example demonstrates how the parameters feature can be applied. In this example, the URL of the dataset and its path and name are saved in the parameters property of the config.json file. All these values can easily be changed without changing the script in the repository.

Parameters

{

"url": "http://web.stanford.edu/class/archive/cs/cs109/cs109.1166/stuff/titanic.csv",

"path": "newfolder",

"name": "titanic.csv"

}

Script (main.py in Git repository)

#import necessary libraries

import requests

import json

import pandas as pd

from pathlib import Path

#obtain url property from config.json file

with open('/data/config.json') as f:

json_data = json.load(f)

url = json_data['parameters']['url']

path = json_data['parameters']['path']

name = json_data['parameters']['name']

#request url and save response into variable

r = requests.get(url)

#create full path for the file, make parent directory

full_path = '/data/out/tables/' + path + "/" + name

Path('/data/out/tables/' + path).mkdir(parents=True, exist_ok=True)

#create or open (if exists) output file for writing and write the content of the response

with open(full_path, 'wb') as f:

f.write(r.content)

#read output file and print to console log first 10 rows

data = pd.read_csv(full_path, header=0)

print(data.loc[0:10,["Name", "Survived"]])

Example 2

This example illustrates opening, filtering and writing a CSV file using pandas, which is one of the most common Python libraries for data analytics. In this script, we’ll use the Titanic dataset which contains data of about 887 of the real Titanic passengers. This dataset is open and very common in data analytics and data science courses.

Let’s imagine we need to analyze the data of male and female passengers separately and want to write the data in 2 separate files. Data in this example was previously downloaded using a Connector component. We will show you how you can reproduce the code on your computer below.

Script (main.py in Git repository)

#import pandas library

import pandas as pd

#read data from file and keep it in data variable

data = pd.read_csv('/data/in/tables/titanic.csv')

#filter rows with male and female passengers, keep data in variables

data_male = data[data['Sex']=='male']

data_female = data[data['Sex']!='male']

#reset indexes to each dataset so it starts from 0 and grows incrementally

data_male.reset_index(drop=True, inplace=True)

data_female.reset_index(drop=True, inplace=True)

#write data to output files

data_female.to_csv('/data/out/tables/titanic_filter_female.csv')

data_male.to_csv('/data/out/tables/titanic_filter_male.csv')Reproducing and debugging

If you want to reproduce running the code on your computer for testing and debugging, or you want to write the script in a local IDE and copy-paste it in Meiro configuration, the easiest way to do this would be to reproduce the folder structure as below:

/data

/in

/tables

/files

/out

/tables

/files

/repository

main.py

requirements.txtInput files and tables should be located in the folder in/ in the corresponding subfolders, output files, and tables in out/files and out/tables respectively.

To recreate Example 2, you will need to download the dataset and save it to the folder /data/in/tables as titanic.csv, paste the code from the example to the script file in the repository folder and run it. New files will be written to the folder /data/out/tables/. We draw your attention to the fact that in both the examples, absolute paths are used because of the specifics of Python from Git Repository processor.