Loader Kafka

Loader Kafka sends input data as messages to Kafka brokers.

The component can produce two types of message payloads:

- JSON message payloads
- Avro message payloads

The component builds on the Confluent Python client for Kafka (the confluent-kafka package), which wraps the librdkafka C/C++ library.

For authentication and communication encryption, the component supports the following modes of operation:

- PLAINTEXT: No authentication and no encryption
- SSL: Authentication and broker communication encryption using SSL/TLS certificates
- SASL_SSL: Authentication using Kerberos (GSSAPI) with encryption using SSL/TLS certificates
- SASL_PLAINTEXT: Authentication using Kerberos (GSSAPI) without communication encryption

Consult the relevant section of the documentation, because configuration parameters may have a specific meaning or behavior depending on the message payload type or authentication scheme.
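As a rough illustration of how the four modes differ, the sketch below builds a client configuration dictionary of the kind accepted by the confluent-kafka `Producer`. The dictionary keys are standard librdkafka properties. The helper name `build_client_config`, its arguments, and the broker address, file paths, and principal in the usage are hypothetical and are not the component's own parameter names.

```python
def build_client_config(bootstrap_servers, protocol="PLAINTEXT", ssl=None, kerberos=None):
    """Return a librdkafka-style config dict for one of the four security modes.

    `ssl` and `kerberos` are plain dicts of librdkafka properties. This helper
    is illustrative only; it is not the component's actual configuration API.
    """
    allowed = {"PLAINTEXT", "SSL", "SASL_SSL", "SASL_PLAINTEXT"}
    if protocol not in allowed:
        raise ValueError(f"unsupported security protocol: {protocol}")

    config = {
        "bootstrap.servers": bootstrap_servers,
        "security.protocol": protocol,
    }

    # SSL and SASL_SSL encrypt the connection, so certificate settings apply.
    if protocol in ("SSL", "SASL_SSL"):
        config.update(ssl or {})

    # Both SASL modes authenticate with Kerberos (GSSAPI).
    if protocol.startswith("SASL"):
        if not kerberos:
            raise ValueError(f"{protocol} requires Kerberos settings")
        config["sasl.mechanisms"] = "GSSAPI"
        config.update(kerberos)

    return config


# Hypothetical values, for illustration only.
example = build_client_config(
    "broker1:9093",
    protocol="SASL_SSL",
    ssl={"ssl.ca.location": "/path/to/ca.pem"},
    kerberos={
        "sasl.kerberos.service.name": "kafka",
        "sasl.kerberos.principal": "loader@EXAMPLE.COM",
        "sasl.kerberos.keytab": "/path/to/loader.keytab",
    },
)
```

The resulting dictionary could be passed to `confluent_kafka.Producer(example)`. Which of these properties the component actually exposes depends on its configuration schema.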

Warning: Known Limitations:

* The topic must exist prior to loading. The component does not create a target topic automatically.

* The component only supports schema discovery and message production for Avro payloads.
* Avro schemas are only supported for message values, not for message keys.
* The component was tested against the Confluent Kafka Schema Registry. Other registry implementations were not tested but may work.
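Because the target topic is not created automatically, it can help to confirm that it exists before a load is started. The following is a minimal sketch of such a check, assuming an object with the same `list_topics` method as the confluent-kafka `AdminClient`. The helper name `topic_exists` and the broker address and topic name in the commented usage are hypothetical.

```python
def topic_exists(admin_client, topic, timeout=10.0):
    """Return True if `topic` appears in the cluster metadata.

    `admin_client` is expected to behave like confluent_kafka.admin.AdminClient:
    list_topics() returns metadata whose `.topics` attribute is a dict keyed
    by topic name.
    """
    metadata = admin_client.list_topics(timeout=timeout)
    return topic in metadata.topics


# Usage against a real cluster (requires the confluent-kafka package):
# from confluent_kafka.admin import AdminClient
# admin = AdminClient({"bootstrap.servers": "broker1:9092"})
# if not topic_exists(admin, "my-topic"):
#     raise SystemExit("Create the topic before running the loader.")
```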

Data In/Data Out

| Direction | Description |
| --- | --- |
| Data In | The component reads the configuration, loads the data from the input files, and sends it to the Kafka server. Files to be uploaded should be placed in the input folder (see the Path parameter) and must be newline-delimited JSON files. |
| Data Out | N/A |

Learn more about the folder structure here.
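For readers unfamiliar with the input format, newline-delimited JSON stores one complete JSON object per line. The sketch below shows one way such a file could be read record by record. The function name `iter_ndjson` is hypothetical and is not taken from the component's source.

```python
import json


def iter_ndjson(path):
    """Yield one parsed JSON value per non-empty line of an NDJSON file."""
    with open(path, encoding="utf-8") as handle:
        for line_number, line in enumerate(handle, start=1):
            line = line.strip()
            if not line:
                continue  # tolerate blank lines between records
            try:
                yield json.loads(line)
            except json.JSONDecodeError as exc:
                # Report the offending line so a bad record is easy to locate.
                raise ValueError(
                    f"{path}:{line_number}: invalid JSON ({exc.msg})"
                ) from exc
```

Reading lazily with a generator keeps memory use flat for large input files, since only one record is held at a time.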

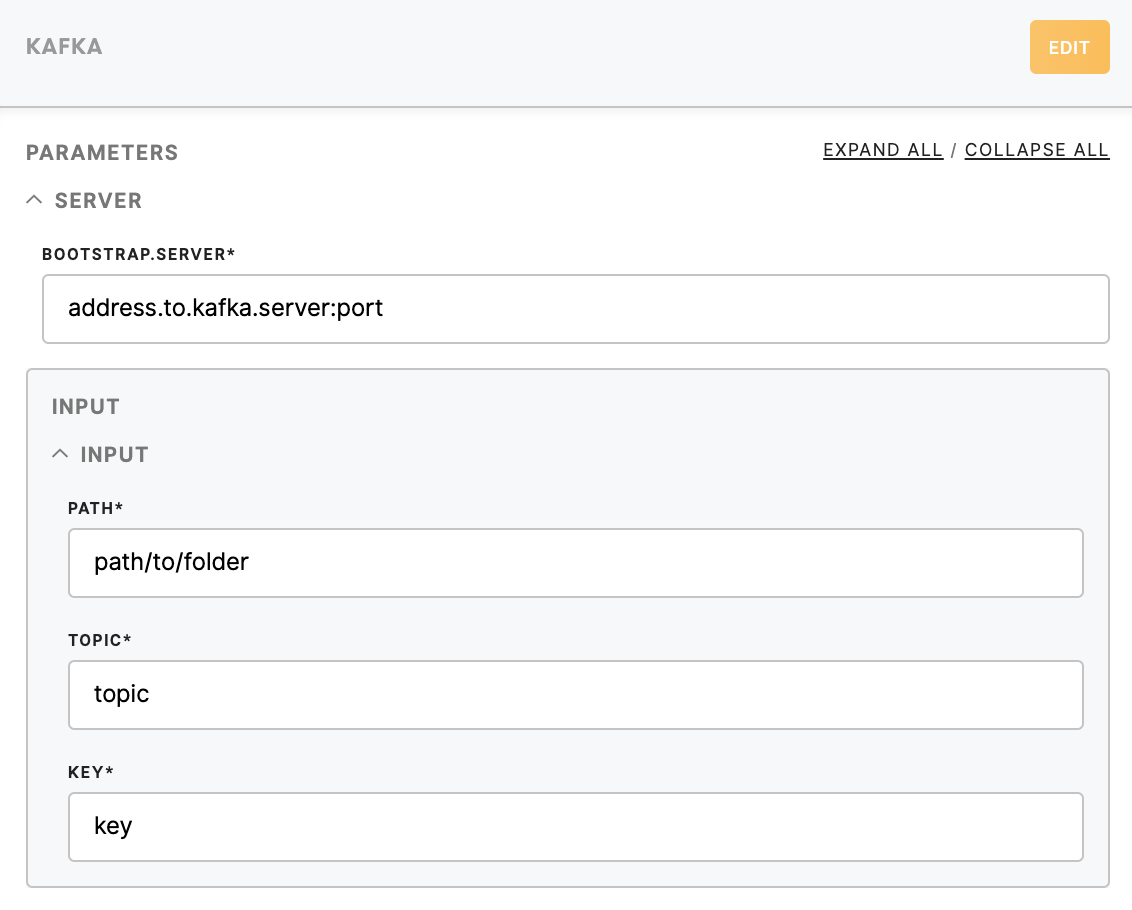

Parameters

| Parameter | Description |
| --- | --- |
| Bootstrap.Server (required) | Address of the Kafka server. |
| Path (required) | Path to the data files. Example: *.data/in/files/folder_name/file_name.ndjson |
| Topic (required) | Name of the Kafka topic to which the loaded files are sent. |
| Key (required) | Name of a key in the data file. This key is used as the message key in Kafka. |
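To show how the Topic and Key parameters might fit together, here is a minimal sketch that sends records as JSON payloads. It assumes that Key names a field in each record and that the field's value becomes the Kafka message key. That reading is an interpretation of the description above, not confirmed behavior. The function name `send_records` is hypothetical, and `producer` is any object offering the `produce`, `poll`, and `flush` methods of the confluent-kafka `Producer`.

```python
import json


def send_records(producer, topic, records, key_field):
    """Queue each record on `topic` as a JSON value and return the count.

    Assumption: the message key is the value of record[key_field]; the
    component's real key handling may differ.
    """
    count = 0
    for record in records:
        key = str(record[key_field]).encode("utf-8")
        value = json.dumps(record).encode("utf-8")
        producer.produce(topic, key=key, value=value)
        producer.poll(0)  # give the client a chance to run delivery callbacks
        count += 1
    producer.flush()  # block until all queued messages are delivered
    return count


# Usage against a real broker (requires the confluent-kafka package):
# from confluent_kafka import Producer
# producer = Producer({"bootstrap.servers": "broker1:9092"})
# send_records(producer, "my-topic", [{"id": 1, "name": "a"}], key_field="id")
```

Messages with the same key are routed to the same partition by the default partitioner, which is the usual reason for choosing a stable record field as the key.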